Ryan Howell

Nov 25, 2025

Elliott Round

Jul 31, 2023

At M-XR, our long-term vision has been to make studio-quality material scanning available to the masses, for both 2D textures and 3D objects. We're driven by the prospect of enabling anyone to create photorealistic digital scenes with relative ease, and by the creative potential that would unlock.

Material capture is typically a complex task: it can involve not only multiple high-end cameras and lights, but also an array of sophisticated sensors and filters. This inherent complexity often necessitates expensive, specialised hardware that is beyond the reach of most users.

Our strategy in confronting this challenge has been to focus solely on the foundational elements of these systems, cameras and lights, and not depend on the more advanced components of such scanners. In demonstrating that our method can capture materials effectively using just these two items, we have simplified the process dramatically.

Because our method does not depend on complex hardware from the outset, moving to mobile devices becomes a much more feasible proposition despite their inherent limitations: a mobile device, too, consists of a camera and a light.

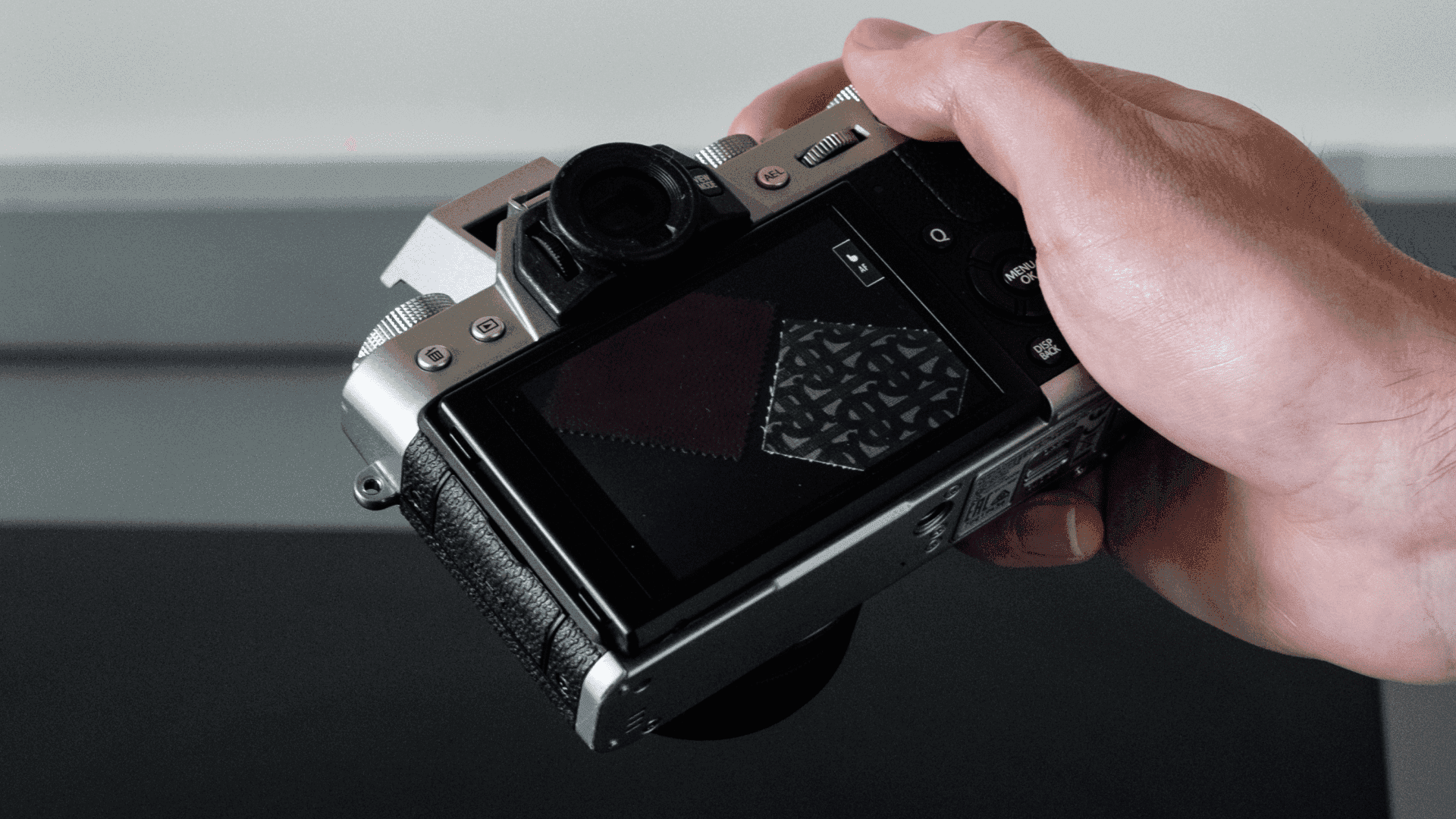

Before jumping straight to mobile-phone scanning, we wanted to explore what we call omni-directional scanning. In this type of capture, the light source is coupled to the camera: if you move the camera, the light moves with it. Examples range from the flash on a smartphone or the built-in flash on a point-and-shoot camera to an array of LEDs affixed to a camera.

In this process, the user circles around the subject, capturing all the necessary angles, much like any standard photogrammetry workflow. The key distinction lies in the fact that the camera's flash remains active during the entire capture process.

The captured data is then processed through the same pipeline as any other M-XR scan, so the final results are held to the same standard of quality regardless of your budget.

We are thrilled to share some of our preliminary test results, which successfully captured the diffuse, specular, specular-tint, roughness, and normal texture maps. These initial scans offer promising evidence that swatch scanning with a single camera is not only feasible, but also highly effective. Our team is now focusing on further refining this process.

In the coming months, we plan to conduct extensive testing on mobile devices. We've already run tests using compressed JPEG photographs (from a DSLR) and observed only minimal degradation in quality, a truly encouraging outcome.

To set a solid foundation for further refinement, we've also captured 3D assets as a baseline. As with our other processes, we used a photogrammetry-like approach to capture the base mesh, with the camera's flash illuminating the subject. Remarkably, we were able to do this in a well-lit room by carefully selecting exposure settings that allowed the flash to overpower the ambient lighting.
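To illustrate why exposure settings can let a flash overpower room light, here is a minimal sketch of the general photographic principle rather than our actual capture settings, which are not given here. It assumes the flash pulse is much shorter than the shutter time, so shortening the shutter dims the ambient contribution while leaving the flash contribution unchanged. The function name and the example shutter times are hypothetical.

```python
import math


def flash_over_ambient_stops(baseline_stops: float, t_old: float, t_new: float) -> float:
    """Estimate flash-to-ambient balance (in stops) after a shutter-time change.

    Assumption: ambient exposure scales linearly with shutter time, while the
    flash contribution does not depend on it (the pulse is far shorter than
    the shutter time). Aperture and ISO affect both equally, so they are
    left out of the ratio.
    """
    if t_old <= 0 or t_new <= 0:
        raise ValueError("shutter times must be positive")
    # Ambient changes by log2(t_new / t_old) stops; flash stays constant.
    ambient_change = math.log2(t_new / t_old)
    return baseline_stops - ambient_change


# Hypothetical example: flash and ambient are balanced (0 stops) at 1/30 s.
# Shortening the shutter to 1/200 s pushes ambient down by roughly 2.7 stops.
gain = flash_over_ambient_stops(0.0, 1 / 30, 1 / 200)
print(f"flash leads ambient by {gain:.2f} stops")
```

On cameras with a focal-plane shutter this only holds up to the flash sync speed, so in practice the remaining margin comes from stopping down the aperture or lowering ISO and raising flash power to compensate.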

Granted, the scanned speaker isn't perfect and does display some visual artefacts. However, the consistency of these results, coupled with the clear distinction between the rubber and fabric, further underscores the robustness of the '.mxr' format in supporting a diverse array of scans. We are confident that such artefacts will be significantly reduced, if not completely eliminated, with continued research and development in this area.